Troubleshooting


The table below lists common issues and solutions for the VNG Cloud BlockStorage CSI Driver.

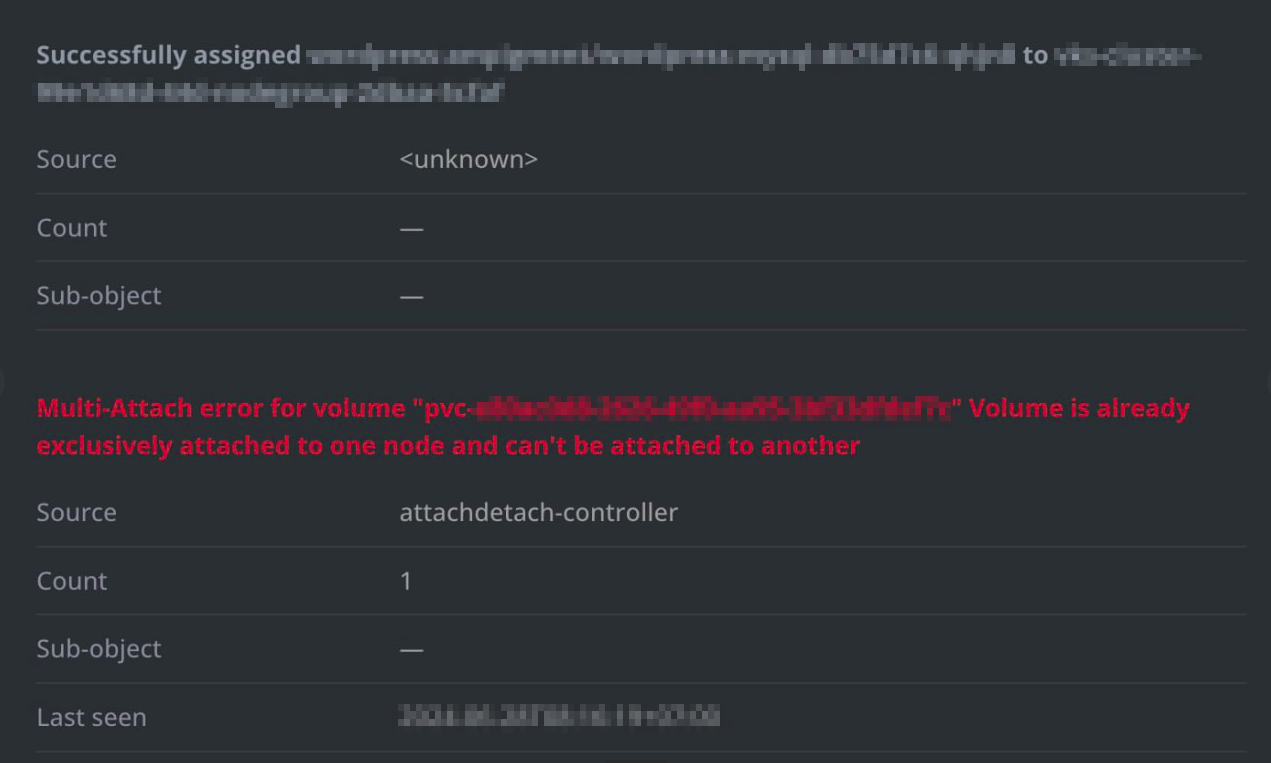

| # | Issue | Solution | Notes |
| --- | --- | --- | --- |
| Issue 1 | `Multi-Attach error for volume "pvc-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" Volume is already exclusive attached to one node and can't be attached to another` | | |

Solutions

Issue 1

Reason

- The error `Multi-Attach error for volume "pvc-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx" Volume is already exclusive attached to one node and can't be attached to another` in Kubernetes indicates that a Persistent Volume Claim (PVC) is being used by multiple pods on different nodes, but the volume is already attached to a specific node and CANNOT be attached to another node at the same time.
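For reference, a claim such as the `my-pvc` used in the examples below would typically request the `ReadWriteOnce` access mode, which allows the volume to be mounted read-write by only one node at a time. This is a minimal sketch: the `storageClassName` value and the storage size are hypothetical placeholders, so substitute the StorageClass your cluster defines for the VNG Cloud BlockStorage CSI Driver.

```yaml
# Minimal PVC sketch (assumption: storage class name and size are placeholders).
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  accessModes:
    - ReadWriteOnce                          # mountable read-write by a single node
  storageClassName: "<block-storage-class>"  # hypothetical placeholder
  resources:
    requests:
      storage: 20Gi                          # example size
```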

Solutions

sol-csi-01

- Ensure Pods Using the Same PVC are Scheduled on the Same Node:

  - You can use `nodeAffinity` or `podAffinity` to ensure that pods using the same PVC are scheduled on the same node.
  - Example with `nodeAffinity`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:latest
          volumeMounts:
            - mountPath: /data
              name: my-volume
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: my-pvc
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/hostname
                    operator: In
                    values:
                      - node1   # replace with the name of the target node
```

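The same goal can be reached with `podAffinity`. The sketch below assumes a second, hypothetical Deployment named `my-worker` (with a hypothetical image) that mounts the same `my-pvc`. Its required pod-affinity rule, with `topologyKey: kubernetes.io/hostname`, asks the scheduler to place it only on a node that already runs a pod labeled `app: my-app`.

```yaml
# Hypothetical second workload that shares my-pvc with my-app.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-worker
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-worker
  template:
    metadata:
      labels:
        app: my-worker
    spec:
      affinity:
        podAffinity:
          # Only schedule onto a node that already runs a pod labeled app=my-app
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchLabels:
                  app: my-app
              topologyKey: kubernetes.io/hostname
      containers:
        - name: my-worker-container
          image: my-worker:latest   # hypothetical image
          volumeMounts:
            - mountPath: /data
              name: my-volume
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: my-pvc
```

If no `my-app` pod is running, a `my-worker` pod with this rule stays Pending, because the rule only matches pods of the other Deployment.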

sol-csi-02

- Delete and recreate the pod using the PVC:

  - As a temporary workaround, if the volume is no longer needed on the old node, you can delete the pod and let Kubernetes reschedule it (this assumes the pod is managed by a controller, such as a Deployment, that recreates it). Kubernetes then detaches the volume from the old node and attaches it to the new node.

  - Example:

```bash
kubectl delete pod <pod-name>
```

sol-csi-03

- Scale down the deployment to 0 replicas and scale it back up:

  - If you have multiple replicas of the same pod, you can scale the deployment down to 0 replicas and then scale it back up. This forces Kubernetes to terminate all pods, releasing the volume, before it reschedules them and attaches the volume to the new node. For example:

```bash
kubectl -n <namespace> scale deployment/<deployment_name> \
  --replicas=0
```

  - After the pods have terminated, scale the replica count back to the desired value:

```bash
kubectl -n <namespace> scale deployment/<deployment_name> \
  --replicas=<desired_replica>
```


sol-csi-04

- Sometimes the `VolumeAttachment` resource is not deleted properly. In that case, you can delete the `VolumeAttachment` resource manually:

  - Get the `VolumeAttachment` resource:

```bash
kubectl get volumeattachment -A | grep pvc-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
```

  - Delete the `VolumeAttachment` resource:

```bash
kubectl delete volumeattachment <volumeattachment-name>
```

  - Delete the pod that is stuck:

```bash
kubectl delete pod <pod-name>
```
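Before deleting a `VolumeAttachment`, it can help to confirm which PersistentVolume and node it refers to, for example with `kubectl get volumeattachment <volumeattachment-name> -o yaml`. An abbreviated object looks roughly like the following; all values are illustrative placeholders, and the `attacher` value depends on the CSI driver name registered in your cluster.

```yaml
# Abbreviated VolumeAttachment (illustrative placeholder values only).
apiVersion: storage.k8s.io/v1
kind: VolumeAttachment
metadata:
  name: csi-<hash>                 # the <volumeattachment-name> to delete
spec:
  attacher: "<csi-driver-name>"    # placeholder: name of the CSI driver
  nodeName: node1                  # node the volume is currently attached to
  source:
    persistentVolumeName: pvc-xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
status:
  attached: true
```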

sol-csi-05

- Change the strategy to `Recreate` (IMPORTANT: be aware that this strategy may cause application downtime during the transition):

  - If you are using the `RollingUpdate` strategy (the Deployment default), consider switching to the `Recreate` strategy. This ensures that the old pod is terminated before the new one is created, which releases the PVC volume.
  - Example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 1
  strategy:
    type: Recreate # Change to Recreate
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:latest
          volumeMounts:
            - mountPath: /data
              name: my-volume
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: my-pvc
```


sol-csi-06

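- Use `StatefulSet` instead of `Deployment`:

  - A `StatefulSet` terminates and starts its pods in order and replaces a pod only after the old pod with the same identity has terminated, which avoids the Multi-Attach error. Note that this solution might require additional PVC volumes (typically one per replica when using `volumeClaimTemplates`).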

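A minimal `StatefulSet` sketch, adapted from the Deployment examples above, might look like this. Instead of referencing the shared `my-pvc`, it uses `volumeClaimTemplates` so that each replica gets its own PVC. The headless Service named in `serviceName` and the storage size are assumptions, and because `storageClassName` is omitted, the cluster's default StorageClass is used.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-app
spec:
  serviceName: my-app        # assumed headless Service, created separately
  replicas: 1
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:latest
          volumeMounts:
            - mountPath: /data
              name: my-volume
  volumeClaimTemplates:
    # One PVC per replica: my-volume-my-app-0, my-volume-my-app-1, ...
    - metadata:
        name: my-volume
      spec:
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 20Gi    # example size
```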

sol-csi-07

- Use NFS volumes:

- For production workloads and services demanding seamless roll-out deployments and scaling, consider leveraging Network File System (NFS) volumes. These types of volumes inherently support multi-attach scenarios, allowing multiple pods to share a single volume without encountering the Multi-Attach error.
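As a sketch, a statically provisioned NFS PersistentVolume and a matching claim with the `ReadWriteMany` access mode could look like the following. The server address `10.0.0.10` and export path `/exports/data` are hypothetical placeholders for an NFS server you already operate, and the worker nodes need an NFS client installed. Workloads would then reference `my-nfs-pvc` instead of `my-pvc`.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-nfs-pv
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteMany          # can be mounted read-write by many nodes
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 10.0.0.10        # hypothetical NFS server address
    path: /exports/data      # hypothetical export path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""       # empty: bind to a pre-created PV, no dynamic provisioning
  volumeName: my-nfs-pv      # bind explicitly to the PV above
  resources:
    requests:
      storage: 100Gi
```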